Conway chained arrow notation: Difference between revisions

en>Chasrob |

en>Magioladitis →Properties: fix syntax |


In [[Vapnik–Chervonenkis theory|statistical learning theory]], or sometimes [[computational learning theory]], the '''VC dimension''' (for '''Vapnik–Chervonenkis dimension''') is a measure of the [[membership function (mathematics)|capacity]] of a [[statistical classification]] [[algorithm]], defined as the [[cardinality]] of the largest set of points that the algorithm can [[Shattering (machine learning)|shatter]]. It is a core concept in [[Vapnik–Chervonenkis theory]], and was originally defined by [[Vladimir Vapnik]] and [[Alexey Chervonenkis]].

Informally, the capacity of a classification model is related to how complicated it can be. For example, consider the [[Heaviside step function|thresholding]] of a high-[[degree of a polynomial|degree]] [[polynomial]]: if the polynomial evaluates above zero, that point is classified as positive, otherwise as negative. A high-degree polynomial can be wiggly, so it can fit a given set of training points well. But one can expect that the classifier will make errors on other points, because it is too wiggly. Such a polynomial has a high capacity. A much simpler alternative is to threshold a linear function. This function may not fit the training set well, because it has a low capacity. This notion of capacity is made more rigorous below.

== Shattering ==

A classification model <math>f</math> with some parameter vector <math>\theta</math> is said to ''shatter'' a set of data points <math>(x_1,x_2,\ldots,x_n)</math> if, for all assignments of labels to those points, there exists a <math>\theta</math> such that the model <math>f</math> makes no errors when evaluating that set of data points.

The VC dimension of a model <math>f</math> is the maximum number of points that can be arranged so that <math>f</math> shatters them. More formally, it is the maximum <math>h</math> such that some set of data points of [[cardinality]] <math>h</math> can be shattered by <math>f</math>.

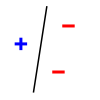

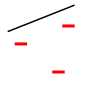

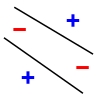

For example, consider a [[linear classifier|straight line]] in the plane as the classification model: the model used by a [[perceptron]]. The line should separate positive data points from negative data points. There exist sets of 3 points that can indeed be shattered using this model (any 3 points that are not collinear can be shattered). However, no set of 4 points can be shattered: by [[Radon's theorem]], any four points can be partitioned into two subsets with intersecting convex hulls, so it is not possible to separate one of these two subsets from the other. Thus, the VC dimension of this particular classifier is 3. Note that while one may choose any arrangement of points, that arrangement must stay fixed while every label assignment is tested. Only 3 of the 2<sup>3</sup> = 8 possible label assignments are shown for the three points.

{| border="0" cellpadding="4" cellspacing="0"
|- style="text-align:center;"
| style="background:#dfd;"| [[File:VC1.svg]]
| style="background:#dfd;"| [[File:VC2.svg]]
| style="background:#dfd;"| [[File:VC3.svg]]
| style="background:#fdd;"| [[File:VC4.svg]]
|- style="text-align:center;"
| colspan="3" style="background:#dfd;"| '''3 points shattered'''
| style="background:#fdd;"| '''4 points impossible'''
|}

== Uses ==

The VC dimension is useful in statistical learning theory because it yields a [[probabilistic]] [[upper bound]] on the test error of a classification model.

Vapnik<ref>Vapnik, Vladimir. The nature of statistical learning theory. Springer, 2000.</ref> proved that, for test data drawn [[Independent identically-distributed random variables|i.i.d.]] from the same distribution as the training set, the test error is bounded above with probability <math>1 - \eta</math>:

<math>
P \left(\text{test error} \leq \text{training error} + \sqrt{\frac{h(\log(2N/h)+1)-\log(\eta/4)}{N}} \right) = 1 - \eta
</math>

where <math>h</math> is the VC dimension of the classification model, and <math>N</math> is the size of the training set (the formula is valid only when <math>h \ll N</math>). Similar complexity bounds can be derived using [[Rademacher complexity]], which can sometimes provide more insight than VC dimension calculations into statistical methods such as those using [[kernel methods|kernels]].

In [[computational geometry]], VC dimension is one of the critical parameters in the size of [[E-net (computational geometry)|ε-nets]], which determines the complexity of approximation algorithms based on them; range sets without finite VC dimension may not have finite ε-nets at all.

==See also==

*[[Sauer–Shelah lemma]], a bound on the number of sets in a set system in terms of the VC dimension

== References ==

<references/>

* Andrew Moore's [http://www-2.cs.cmu.edu/~awm/tutorials/vcdim.html VC dimension tutorial]

* Vapnik, Vladimir. "The nature of statistical learning theory". Springer, 2000.

* V. Vapnik and A. Chervonenkis. "On the uniform convergence of relative frequencies of events to their probabilities." ''Theory of Probability and its Applications'', 16(2):264–280, 1971.

* A. Blumer, A. Ehrenfeucht, D. Haussler, and [[Manfred K. Warmuth|M. K. Warmuth]]. "Learnability and the Vapnik–Chervonenkis dimension." ''Journal of the ACM'', 36(4):929–965, 1989.

* Christopher Burges. "Tutorial on SVMs for Pattern Recognition" (also covers the VC dimension). [http://citeseer.ist.psu.edu/burges98tutorial.html]

* [[Bernard Chazelle]]. "The Discrepancy Method." [http://www.cs.princeton.edu/~chazelle/book.html]

[[Category:Dimension]]

[[Category:Statistical classification]]

[[Category:Computational learning theory]]

[[Category:Measures of complexity]]

Revision as of 16:18, 12 January 2014

In statistical learning theory, or sometimes computational learning theory, the VC dimension (for Vapnik–Chervonenkis dimension) is a measure of the capacity of a statistical classification algorithm, defined as the cardinality of the largest set of points that the algorithm can shatter. It is a core concept in Vapnik–Chervonenkis theory, and was originally defined by Vladimir Vapnik and Alexey Chervonenkis.

Informally, the capacity of a classification model is related to how complicated it can be. For example, consider the thresholding of a high-degree polynomial: if the polynomial evaluates above zero, that point is classified as positive, otherwise as negative. A high-degree polynomial can be wiggly, so it can fit a given set of training points well. But one can expect that the classifier will make errors on other points, because it is too wiggly. Such a polynomial has a high capacity. A much simpler alternative is to threshold a linear function. This function may not fit the training set well, because it has a low capacity. This notion of capacity is made more rigorous below.

Shattering

A classification model <math>f</math> with some parameter vector <math>\theta</math> is said to shatter a set of data points <math>(x_1,x_2,\ldots,x_n)</math> if, for all assignments of labels to those points, there exists a <math>\theta</math> such that the model <math>f</math> makes no errors when evaluating that set of data points.

The VC dimension of a model <math>f</math> is the maximum number of points that can be arranged so that <math>f</math> shatters them. More formally, it is the maximum <math>h</math> such that some set of data points of cardinality <math>h</math> can be shattered by <math>f</math>.
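The definition of shattering can be checked by brute force on small examples. The following is a minimal sketch, not a general-purpose tool: the helper names `shatters` and `threshold` are made up for illustration, the model is a hypothetical one-dimensional threshold classifier, and a finite grid of parameter values stands in for "there exists a θ". The points are assumed to be distinct.

```python
def shatters(points, classifiers):
    """Return True if every 0/1 labeling of `points` is produced by some classifier.

    `points` must be distinct; `classifiers` is a finite list of functions x -> 0 or 1.
    """
    # Collect the labelings that the family actually realizes on these points.
    realized = {tuple(clf(x) for x in points) for clf in classifiers}
    # Shattering means all 2^n labelings are realized.
    return len(realized) == 2 ** len(points)


def threshold(theta):
    """Hypothetical model f_theta(x) = 1 if x > theta, else 0."""
    return lambda x: int(x > theta)


# Assumption: a finite grid of theta values approximates the full parameter range.
thetas = [t / 2 for t in range(-10, 11)]  # -5.0, -4.5, ..., 5.0
classifiers = [threshold(t) for t in thetas]

one_point = shatters([0.25], classifiers)         # both labels are reachable
two_points = shatters([0.25, 1.25], classifiers)  # labeling (1, 0) is unreachable
print(one_point, two_points)
```

Under these assumptions a single point is shattered but the pair is not, because no threshold can label the smaller point positive and the larger one negative. That is consistent with this toy model having VC dimension 1.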

For example, consider a straight line in the plane as the classification model: the model used by a perceptron. The line should separate positive data points from negative data points. There exist sets of 3 points that can indeed be shattered using this model (any 3 points that are not collinear can be shattered). However, no set of 4 points can be shattered: by Radon's theorem, any four points can be partitioned into two subsets with intersecting convex hulls, so it is not possible to separate one of these two subsets from the other. Thus, the VC dimension of this particular classifier is 3. Note that while one may choose any arrangement of points, that arrangement must stay fixed while every label assignment is tested. Only 3 of the <math>2^3 = 8</math> possible label assignments are shown for the three points.

[Figure: three panels captioned "3 points shattered" and one panel captioned "4 points impossible".]
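The line-classifier example can be explored numerically. The sketch below runs a plain perceptron on every labeling of a point set; the function names, the epoch cap and the two point sets are illustrative choices, not part of the original text. If the perceptron converges, the labeling is certainly separable. If it fails to converge within the cap, that only suggests non-separability. The actual proof for four points is the Radon's theorem argument above.

```python
from itertools import product


def perceptron_separates(points, labels, max_epochs=1000):
    """Try to find a line w1*x + w2*y + b = 0 that strictly separates the labels.

    `labels` are +1 or -1. Returning True is a proof of separability; returning
    False only means no separating line was found within `max_epochs`.
    """
    w1 = w2 = b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for (x, y), t in zip(points, labels):
            if t * (w1 * x + w2 * y + b) <= 0:  # misclassified or on the line
                w1 += t * x
                w2 += t * y
                b += t
                mistakes += 1
        if mistakes == 0:
            return True
    return False


def line_shatters(points, max_epochs=1000):
    """Check every one of the 2^n labelings of `points` with the perceptron."""
    return all(
        perceptron_separates(points, labels, max_epochs)
        for labels in product((-1, 1), repeat=len(points))
    )


three = [(0, 0), (1, 0), (0, 1)]         # not collinear
four = [(0, 0), (1, 0), (0, 1), (1, 1)]  # corners of a square

three_ok = line_shatters(three)
four_ok = line_shatters(four)
print(three_ok, four_ok)
```

For the square, the labeling that puts opposite corners in the same class (the XOR pattern) has no separating line, so the check fails for that set. This run covers only one particular arrangement of four points; the claim that no arrangement works rests on the geometric argument, not on the code.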

Uses

The VC dimension is useful in statistical learning theory because it yields a probabilistic upper bound on the test error of a classification model.

Vapnik [1] proved that, for test data drawn i.i.d. from the same distribution as the training set, the test error is bounded above with probability <math>1 - \eta</math>:

<math>
P \left(\text{test error} \leq \text{training error} + \sqrt{\frac{h(\log(2N/h)+1)-\log(\eta/4)}{N}} \right) = 1 - \eta
</math>

where <math>h</math> is the VC dimension of the classification model, and <math>N</math> is the size of the training set (the formula is valid only when <math>h \ll N</math>). Similar complexity bounds can be derived using Rademacher complexity, which can sometimes provide more insight than VC dimension calculations into statistical methods such as those using kernels.
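To get a feel for the bound, the square-root term sqrt((h(log(2N/h) + 1) − log(η/4)) / N) can be evaluated directly. The sketch below assumes the logarithm is the natural logarithm; the function names and the sample values of h, N and η are chosen purely for illustration.

```python
import math


def vc_confidence_term(h, n, eta):
    """Evaluate sqrt((h * (log(2n/h) + 1) - log(eta/4)) / n).

    h: VC dimension, n: training set size (the bound assumes h << n),
    eta: failure probability. Natural logarithm is an assumption here.
    """
    return math.sqrt((h * (math.log(2 * n / h) + 1) - math.log(eta / 4)) / n)


def bound_on_test_error(training_error, h, n, eta):
    """Upper bound on the test error that holds with probability 1 - eta."""
    return training_error + vc_confidence_term(h, n, eta)


# Illustrative values: a line classifier in the plane (h = 3), eta = 0.05.
small_sample = vc_confidence_term(h=3, n=1_000, eta=0.05)
large_sample = vc_confidence_term(h=3, n=100_000, eta=0.05)
print(small_sample, large_sample)
```

With these values the term is roughly 0.16 for 1,000 training points and roughly 0.02 for 100,000, which shows how the gap between training error and the bound on test error shrinks as N grows relative to h.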

In computational geometry, VC dimension is one of the critical parameters in the size of ε-nets, which determines the complexity of approximation algorithms based on them; range sets without finite VC dimension may not have finite ε-nets at all.

See also

- Sauer–Shelah lemma, a bound on the number of sets in a set system in terms of the VC dimension

References

- [1] Vapnik, Vladimir. The nature of statistical learning theory. Springer, 2000.

- Andrew Moore's VC dimension tutorial. http://www-2.cs.cmu.edu/~awm/tutorials/vcdim.html

- Vapnik, Vladimir. "The nature of statistical learning theory". Springer, 2000.

- V. Vapnik and A. Chervonenkis. "On the uniform convergence of relative frequencies of events to their probabilities." Theory of Probability and its Applications, 16(2):264–280, 1971.

- A. Blumer, A. Ehrenfeucht, D. Haussler, and M. K. Warmuth. "Learnability and the Vapnik–Chervonenkis dimension." Journal of the ACM, 36(4):929–965, 1989.

- Christopher Burges. "Tutorial on SVMs for Pattern Recognition" (also covers the VC dimension). http://citeseer.ist.psu.edu/burges98tutorial.html

- Bernard Chazelle. "The Discrepancy Method." http://www.cs.princeton.edu/~chazelle/book.html