Brun's theorem: Difference between revisions

→External links: Brun's Constant - MathWorld |

en>Arthur Rubin Reverted 1 edit by Pirokiazuma (talk): Revert blanking. (TW) |

||

| Line 1: | Line 1: | ||

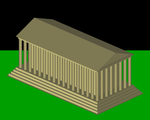

[[Image:7fin.png|thumbnail|right|Scene with shadow mapping]]

[[Image:3noshadow.png|thumbnail|right|Scene with no shadows]]

'''Shadow mapping''' or '''projective shadowing''' is a process by which [[shadow]]s are added to [[3D computer graphics]]. This concept was introduced by [[Lance Williams]] in 1978, in a paper entitled "Casting curved shadows on curved surfaces". Since then, it has been used in both pre-rendered and real-time scenes, including many console and PC games.

Shadows are created by testing whether a [[pixel]] is visible from the light source, by comparing it to a [[z-buffering|z-buffer]] or ''depth'' image of the light source's view, stored in the form of a [[texture mapping|texture]].

==Principle of a shadow and a shadow map==

If you looked out from a source of light, all of the objects you could see would appear in light. Anything behind those objects, however, would be in shadow. This is the basic principle used to create a shadow map. The light's view is rendered, storing the depth of every surface it sees (the shadow map). Next, the regular scene is rendered, comparing the depth of every point drawn (as if it were being seen by the light, rather than the eye) to this depth map.
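The principle can be illustrated with a deliberately tiny sketch. It assumes the surface samples have already been expressed as a texel index in the light's view plus a depth from the light; the function names <code>build_shadow_map</code> and <code>is_lit</code> are illustrative only and do not come from any graphics API.

```python
def build_shadow_map(samples, size):
    """First pass: for each texel, keep the depth of the surface nearest the light."""
    depth_map = [float("inf")] * size
    for texel, depth in samples:
        depth_map[texel] = min(depth_map[texel], depth)
    return depth_map


def is_lit(depth_map, texel, depth):
    """Second pass: a point is lit unless something nearer the light covers its texel."""
    return depth <= depth_map[texel]


# (texel index in the light's view, depth from the light) for three surface samples
samples = [(0, 2.0), (1, 1.0), (1, 3.0)]
shadow_map = build_shadow_map(samples, size=2)
visibility = [is_lit(shadow_map, texel, depth) for texel, depth in samples]
print(shadow_map, visibility)
```

The third sample shares a texel with a nearer surface, so it is the only one reported as shadowed.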

This technique is less accurate than [[shadow volume]]s, but the shadow map can be a faster alternative, depending on how much fill time either technique requires in a particular application, and may therefore be more suitable for real-time applications. In addition, shadow maps do not require the use of an additional [[stencil buffer]], and can be modified to produce shadows with a soft edge. Unlike shadow volumes, however, the accuracy of a shadow map is limited by its resolution.

== Algorithm overview ==

Rendering a shadowed scene involves two major drawing steps. The first produces the shadow map itself, and the second applies it to the scene. Depending on the implementation (and number of lights), this may require two or more drawing passes.

===Creating the shadow map===

[[Image:1light.png|thumb|right|150px|Scene rendered from the light view.]]

[[Image:2shadowmap.png|thumb|right|150px|Scene from the light view, depth map.]]

The first step renders the scene from the light's point of view. For a point light source, the view should be a [[perspective projection]] as wide as its desired angle of effect (it will be a sort of square spotlight). For directional light (e.g., that from the [[Sun]]), an [[orthographic projection]] should be used.
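As a hedged sketch of the two projection choices, the following builds OpenGL-style perspective and orthographic matrices (the same forms produced by <code>gluPerspective</code> and <code>glOrtho</code>) in plain Python. The helper <code>project</code> and the numeric values are assumptions chosen for illustration; a real renderer would also apply a view matrix that places the camera at the light.

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection, suited to a spotlight-like light."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2.0)
    return [
        [f / aspect, 0.0, 0.0, 0.0],
        [0.0, f, 0.0, 0.0],
        [0.0, 0.0, (far + near) / (near - far), 2.0 * far * near / (near - far)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def orthographic(left, right, bottom, top, near, far):
    """OpenGL-style orthographic projection, suited to a directional light."""
    return [
        [2.0 / (right - left), 0.0, 0.0, -(right + left) / (right - left)],
        [0.0, 2.0 / (top - bottom), 0.0, -(top + bottom) / (top - bottom)],
        [0.0, 0.0, -2.0 / (far - near), -(far + near) / (far - near)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def project(matrix, point):
    """Transform a light-space point and apply the perspective division."""
    x, y, z = point
    clip = [row[0] * x + row[1] * y + row[2] * z + row[3] for row in matrix]
    return [c / clip[3] for c in clip[:3]]


spot = perspective(90.0, 1.0, 1.0, 3.0)               # hypothetical 90-degree light cone
sun = orthographic(-10.0, 10.0, -10.0, 10.0, 1.0, 3.0)  # hypothetical 20-unit-wide box

print(project(spot, (0.0, 0.0, -1.0)))   # a point on the near plane
print(project(sun, (10.0, 0.0, -3.0)))   # a point on the far plane, right edge
```

In both cases the near plane maps to a normalized depth of −1 and the far plane to 1, which is the range the later scale-and-bias step expects.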

From this rendering, the depth buffer is extracted and saved. Because only the depth information is relevant, it is common to avoid updating the color buffers and to disable all lighting and texture calculations for this rendering, in order to save drawing time. This [[depth map]] is often stored as a texture in graphics memory.

This depth map must be updated any time there are changes to either the light or the objects in the scene, but can be reused in other situations, such as those where only the viewing camera moves. (If there are multiple lights, a separate depth map must be used for each light.)

In many implementations it is practical to render only a subset of the objects in the scene to the shadow map, in order to save some of the time it takes to redraw the map. Also, a depth offset that shifts the objects away from the light may be applied to the shadow map rendering, in an attempt to resolve [[Z-fighting|stitching]] problems where the depth map value is close to the depth of a surface being drawn (i.e., the shadow-casting surface) in the next step. Alternatively, culling front faces and rendering only the back faces of objects to the shadow map is sometimes used for a similar result.
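The effect of the depth offset can be sketched as follows. This is a simplified model, assuming an 8-bit depth buffer that rounds depths down; the names <code>store_depth</code> and <code>in_shadow</code> and the bias value are illustrative assumptions, not part of any API.

```python
import math


def store_depth(depth, bias=0.0, levels=256):
    """Quantize a depth in [0, 1] as a limited-precision depth buffer might,
    after shifting it away from the light by `bias`."""
    biased = min(depth + bias, 1.0)
    return math.floor(biased * (levels - 1)) / (levels - 1)


def in_shadow(z, stored):
    """Depth map test: shadowed when the point lies behind the stored depth."""
    return z > stored


surface = 0.5  # depth of a surface that should be lit by the light

# Without an offset, rounding makes the surface appear to shadow itself.
print(in_shadow(surface, store_depth(surface)))
# With a small offset applied when rendering the map, the surface is lit.
print(in_shadow(surface, store_depth(surface, bias=0.01)))
# A genuine occluder much nearer the light still casts its shadow.
print(in_shadow(surface, store_depth(0.2, bias=0.01)))
```

Too large an offset has its own cost: shadows detach from the objects that cast them, so the value is usually tuned per scene.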

===Shading the scene===

The second step is to draw the scene from the usual [[perspective projection|camera]] viewpoint, applying the shadow map. This process has three major components: the first is to find the coordinates of the object as seen from the light, the second is the test that compares those coordinates against the depth map, and the third is to draw the object either in shadow or in light, according to the result of the test.

====Light space coordinates====

[[Image:4overmap.png|thumb|right|150px|Visualization of the depth map projected onto the scene]]

In order to test a point against the depth map, its position in the scene coordinates must be transformed into the equivalent position as seen by the light. This is accomplished by a [[matrix multiplication]]. The location of the object on the screen is determined by the usual [[coordinate transformation]], but a second set of coordinates must be generated to locate the object in light space.

The matrix used to transform the world coordinates into the light's viewing coordinates is the same as the one used to render the shadow map in the first step (under [[OpenGL]] this is the product of the modelview and projection matrices). This will produce a set of [[homogeneous coordinates]] that need a perspective division (''see [[3D projection]]'') to become ''normalized device coordinates'', in which each component (''x'', ''y'', or ''z'') falls between −1 and 1 (if it is visible from the light view). Many implementations (such as OpenGL and [[Direct3D]]) require an additional ''scale and bias'' matrix multiplication to map those −1 to 1 values to 0 to 1, which are more usual coordinates for depth map (texture map) lookup. This scaling can be done before the perspective division, and is easily folded into the previous transformation calculation by multiplying that matrix with the following:

<math>
\begin{bmatrix}
0.5 & 0 & 0 & 0.5 \\
0 & 0.5 & 0 & 0.5 \\
0 & 0 & 0.5 & 0.5 \\
0 & 0 & 0 & 1
\end{bmatrix}
</math>
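A short numerical check of the scale-and-bias step, written in plain Python. The clip-space vector and the <code>light_matrix</code> below are made-up values for illustration; only the bias matrix itself comes from the text above.

```python
BIAS = [
    [0.5, 0.0, 0.0, 0.5],
    [0.0, 0.5, 0.0, 0.5],
    [0.0, 0.0, 0.5, 0.5],
    [0.0, 0.0, 0.0, 1.0],
]


def mat_mul(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)]
            for r in range(4)]


def mat_vec(m, v):
    """Product of a 4x4 matrix and a homogeneous column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def divide(v):
    """Perspective division: drop w after dividing x, y and z by it."""
    return [v[i] / v[3] for i in range(3)]


# A hypothetical clip-space position with w = 2.
clip = [-2.0, 0.0, 2.0, 2.0]

# Scaling after the division and scaling before it give the same result.
bias_after = [0.5 * c + 0.5 for c in divide(clip)]
bias_before = divide(mat_vec(BIAS, clip))
print(bias_after, bias_before)

# Folding the bias into a (hypothetical) light view-projection matrix
# leaves a single matrix that maps world positions to texture space.
light_matrix = [
    [0.5, 0.0, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.0],
    [0.0, 0.0, -0.5, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]
shadow_matrix = mat_mul(BIAS, light_matrix)
tex = divide(mat_vec(shadow_matrix, [2.0, 0.0, -2.0, 1.0]))
print(tex)
```

Because the bias matrix scales the offset by ''w'', the later division by ''w'' turns it back into a constant 0.5, which is why the step may be applied before the perspective division.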

If done with a [[shader]], or other graphics hardware extension, this transformation is usually applied at the vertex level, and the generated value is interpolated between vertices and passed to the fragment level.

====Depth map test====

[[Image:5failed.png|thumb|right|150px|Depth map test failures.]]

Once the light-space coordinates are found, the ''x'' and ''y'' values usually correspond to a location in the depth map texture, and the ''z'' value corresponds to its associated depth, which can now be tested against the depth map.

If the ''z'' value is greater than the value stored in the depth map at the appropriate (''x'',''y'') location, the object is considered to be behind an occluding object, and should be marked as a ''failure'', to be drawn in shadow by the drawing process. Otherwise it should be drawn lit.

If the (''x'',''y'') location falls outside the depth map, the programmer must decide whether the surface should be lit or shadowed by default (usually lit).
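The test described above can be sketched as a small function. It assumes the depth map is a list of rows of depths in the 0 to 1 range and that (''x'', ''y'', ''z'') have already been scaled to that range; <code>shadow_test</code> and its <code>outside_lit</code> parameter are illustrative names.

```python
def shadow_test(depth_map, x, y, z, outside_lit=True):
    """Return True when the point passes the depth map test (is lit)."""
    height, width = len(depth_map), len(depth_map[0])
    # Locations outside the map fall back to the chosen default.
    if not (0.0 <= x <= 1.0 and 0.0 <= y <= 1.0):
        return outside_lit
    # Nearest-texel lookup, clamped so that x == 1.0 stays inside the map.
    col = min(int(x * width), width - 1)
    row = min(int(y * height), height - 1)
    # The test fails when the point is farther from the light than the stored depth.
    return z <= depth_map[row][col]


# A 2x2 depth map with an occluder at depth 0.3 covering the first texel.
depth_map = [
    [0.3, 1.0],
    [1.0, 1.0],
]
print(shadow_test(depth_map, 0.1, 0.1, 0.6))  # behind the occluder
print(shadow_test(depth_map, 0.9, 0.1, 0.6))  # nothing in the way
print(shadow_test(depth_map, 1.5, 0.1, 0.6))  # outside the map
```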

In a [[shader]] implementation, this test would be done at the fragment level. Also, care needs to be taken when selecting the type of texture map storage to be used by the hardware: if interpolation cannot be done, the shadow will appear to have a sharp jagged edge (an effect that can be reduced with greater shadow map resolution).

It is possible to modify the depth map test to produce shadows with a soft edge by using a range of values (based on the proximity to the edge of the shadow) rather than simply pass or fail.
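One common way to obtain such a range of values is to average the test over neighbouring texels, in the manner of percentage closer filtering. The sketch below is only one possible variant, assuming a square neighbourhood and equal weights; <code>soft_shadow_test</code> is an illustrative name.

```python
def soft_shadow_test(depth_map, row, col, z, radius=1):
    """Return the fraction (0.0 to 1.0) of neighbouring texels that pass the test."""
    height, width = len(depth_map), len(depth_map[0])
    passed = 0
    total = 0
    for r in range(row - radius, row + radius + 1):
        for c in range(col - radius, col + radius + 1):
            # Skip neighbours that fall outside the depth map.
            if 0 <= r < height and 0 <= c < width:
                total += 1
                if z <= depth_map[r][c]:
                    passed += 1
    return passed / total


# An occluder at depth 0.2 covers the left column of a 3x3 depth map.
depth_map = [
    [0.2, 1.0, 1.0],
    [0.2, 1.0, 1.0],
    [0.2, 1.0, 1.0],
]
for col in range(3):
    print(col, soft_shadow_test(depth_map, 1, col, 0.5))
```

The result rises gradually from the shadowed side to the lit side, and can be used to blend between the shadowed and lit colours instead of switching abruptly.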

The shadow mapping technique can also be modified to draw a texture onto the lit regions, simulating the effect of a [[Video projector|projector]]. The picture above, captioned "Visualization of the depth map projected onto the scene", is an example of such a process.

====Drawing the scene====

[[Image:7fin.png|thumb|right|150px|Final scene, rendered with ambient shadows.]]

Drawing the scene with shadows can be done in several different ways. If programmable [[shader]]s are available, the depth map test may be performed by a fragment shader that simply draws the object in shadow or lit depending on the result, drawing the scene in a single pass (after an earlier pass to generate the shadow map).

If shaders are not available, the depth map test must usually be performed by a hardware extension (such as [http://www.opengl.org/registry/specs/ARB/shadow.txt ''GL_ARB_shadow'']). Such extensions usually do not allow a choice between two lighting models (lit and shadowed), and necessitate more rendering passes:

#Render the entire scene in shadow. For the most common lighting models (''see [[Phong reflection model]]'') this should technically be done using only the ambient component of the light, but this is usually adjusted to also include a dim diffuse light to prevent curved surfaces from appearing flat in shadow.
#Enable the depth map test, and render the scene lit. Areas where the depth map test fails will not be overwritten, and remain shadowed.
#An additional pass may be used for each additional light, using additive [[Alpha blending|blending]] to combine their effect with the lights already drawn. (Each of these passes requires an additional previous pass to generate the associated shadow map.)
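Seen from a single pixel, the passes above amount to starting from the shadowed colour and adding each light's contribution only where its depth map test passes. The following is a minimal sketch of that accumulation using scalar intensities; <code>shade_pixel</code> and the numbers are assumptions for illustration, and the clamp stands in for the saturation of a fixed-point colour buffer.

```python
def shade_pixel(ambient, lights):
    """Combine the shadowed base colour with each light that reaches the pixel.

    `lights` is a list of (contribution, passes_depth_test) pairs, one per light.
    """
    intensity = ambient                 # pass 1: the scene drawn in shadow
    for contribution, passes in lights:
        if passes:                      # later passes: only where the test passes
            intensity += contribution   # additive blending
    return min(intensity, 1.0)          # colour buffers saturate at full intensity


# One pixel reached by the first light but shadowed from the second.
print(shade_pixel(0.1, [(0.5, True), (0.3, False)]))
# The same pixel when both lights reach it.
print(shade_pixel(0.1, [(0.5, True), (0.3, True)]))
```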

The example pictures in this article used the [[OpenGL]] extension [http://www.opengl.org/registry/specs/ARB/shadow_ambient.txt ''GL_ARB_shadow_ambient''] to accomplish the shadow map process in two passes.

== Shadow map real-time implementations ==

One of the key disadvantages of real-time shadow mapping is that the size and depth of the shadow map determine the quality of the final shadows. Insufficient size or depth usually shows up as [[aliasing]] or shadow continuity glitches. A simple way to overcome this limitation is to increase the shadow map size, but memory, computational or hardware constraints do not always allow it. Several commonly used techniques for real-time shadow mapping have been developed to circumvent this limitation. These include Cascaded Shadow Maps,<ref>{{citation
| title = Cascaded shadow maps
| publisher = NVidia
| url = http://developer.download.nvidia.com/SDK/10.5/opengl/src/cascaded_shadow_maps/doc/cascaded_shadow_maps.pdf
| accessdate = 2008-02-14
}}</ref> Trapezoidal Shadow Maps,<ref>{{cite paper
| title = Anti-aliasing and Continuity with Trapezoidal Shadow Maps
| author = Tobias Martin, Tiow-Seng Tan
| url = http://www.comp.nus.edu.sg/~tants/tsm.html
| accessdate = 2008-02-14
}}</ref> Light Space Perspective Shadow Maps,<ref>{{cite paper
| title = Light Space Perspective Shadow Maps
| author = Michael Wimmer, Daniel Scherzer, Werner Purgathofer
| url = http://www.cg.tuwien.ac.at/research/vr/lispsm/
| accessdate = 2008-02-14
}}</ref> and Parallel-Split Shadow Maps.<ref>{{cite paper
| title = Parallel-Split Shadow Maps on Programmable GPUs
| author = Fan Zhang, Hanqiu Sun, Oskari Nyman
| url = http://appsrv.cse.cuhk.edu.hk/~fzhang/pssm_project/
| accessdate = 2008-02-14
}}</ref>

Also notable is that generated shadows, even if free of [[aliasing]], have hard edges, which is not always desirable. In order to emulate real-world soft shadows, several solutions have been developed, either by doing several lookups on the shadow map, by generating geometry meant to emulate the soft edge, or by creating non-standard depth shadow maps. Notable examples of these are Percentage Closer Filtering,<ref>{{cite web
| title = Shadow Map Antialiasing
| publisher = NVidia
| url = http://http.developer.nvidia.com/GPUGems/gpugems_ch11.html
| accessdate = 2008-02-14
}}</ref> Smoothies,<ref>{{cite paper
| title = Rendering Fake Soft Shadows with Smoothies
| author = Eric Chan, Frédo Durand
| url = http://people.csail.mit.edu/ericchan/papers/smoothie/
| accessdate = 2008-02-14
}}</ref> and Variance Shadow Maps.<ref>{{cite web
| title = Variance Shadow Maps
| author = William Donnelly, Andrew Lauritzen
| url = http://www.punkuser.net/vsm/
| accessdate = 2008-02-14
}}</ref>

== Shadow mapping techniques ==

=== Simple ===

*SSM "Simple"

=== Splitting ===

*PSSM "Parallel Split" [http://http.developer.nvidia.com/GPUGems3/gpugems3_ch10.html http://http.developer.nvidia.com/GPUGems3/gpugems3_ch10.html]
*CSM "Cascaded" [http://developer.download.nvidia.com/SDK/10.5/opengl/src/cascaded_shadow_maps/doc/cascaded_shadow_maps.pdf http://developer.download.nvidia.com/SDK/10.5/opengl/src/cascaded_shadow_maps/doc/cascaded_shadow_maps.pdf]

=== Warping ===

*LiSPSM "Light Space Perspective" [http://www.cg.tuwien.ac.at/~scherzer/files/papers/LispSM_survey.pdf http://www.cg.tuwien.ac.at/~scherzer/files/papers/LispSM_survey.pdf]
*TSM "Trapezoid" [http://www.comp.nus.edu.sg/~tants/tsm.html http://www.comp.nus.edu.sg/~tants/tsm.html]
*PSM "Perspective" [http://www-sop.inria.fr/reves/Marc.Stamminger/psm/ http://www-sop.inria.fr/reves/Marc.Stamminger/psm/]
*CSSM "Camera Space" [http://bib.irb.hr/datoteka/570987.12_CSSM.pdf http://bib.irb.hr/datoteka/570987.12_CSSM.pdf]

=== Smoothing ===

*PCF "Percentage Closer Filtering" [http://http.developer.nvidia.com/GPUGems/gpugems_ch11.html http://http.developer.nvidia.com/GPUGems/gpugems_ch11.html]

=== Filtering ===

*ESM "Exponential" [http://www.thomasannen.com/pub/gi2008esm.pdf http://www.thomasannen.com/pub/gi2008esm.pdf]
*CSM "Convolution" [http://research.edm.uhasselt.be/~tmertens/slides/csm.ppt http://research.edm.uhasselt.be/~tmertens/slides/csm.ppt]
*VSM "Variance" [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.104.2569&rep=rep1&type=pdf http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.104.2569&rep=rep1&type=pdf]
*SAVSM "Summed Area Variance" [http://http.developer.nvidia.com/GPUGems3/gpugems3_ch08.html http://http.developer.nvidia.com/GPUGems3/gpugems3_ch08.html]
*SMSR "Shadow Map Silhouette Revectorization" [http://bondarev.nl/?p=326 http://bondarev.nl/?p=326]

=== Soft Shadows ===

*PCSS "Percentage Closer" [http://developer.download.nvidia.com/shaderlibrary/docs/shadow_PCSS.pdf http://developer.download.nvidia.com/shaderlibrary/docs/shadow_PCSS.pdf]
*SSSS "Screen space soft shadows" [http://www.crcnetbase.com/doi/abs/10.1201/b10648-36 http://www.crcnetbase.com/doi/abs/10.1201/b10648-36]
*FIV "Fullsphere Irradiance Vector" [http://getlab.org/publications/FIV/ http://getlab.org/publications/FIV/]

=== Assorted ===

*ASM "Adaptive" [http://www.cs.cornell.edu/~kb/publications/ASM.pdf http://www.cs.cornell.edu/~kb/publications/ASM.pdf]
*AVSM "Adaptive Volumetric" [http://visual-computing.intel-research.net/art/publications/avsm/ http://visual-computing.intel-research.net/art/publications/avsm/]
*CSSM "Camera Space" [http://free-zg.t-com.hr/cssm/ http://free-zg.t-com.hr/cssm/]
*DASM "Deep Adaptive"
*DPSM "Dual Paraboloid" [http://sites.google.com/site/osmanbrian2/dpsm.pdf http://sites.google.com/site/osmanbrian2/dpsm.pdf]
*DSM "Deep" [http://graphics.pixar.com/library/DeepShadows/paper.pdf http://graphics.pixar.com/library/DeepShadows/paper.pdf]
*FSM "Forward" [http://www.cs.unc.edu/~zhangh/technotes/shadow/shadow.ps http://www.cs.unc.edu/~zhangh/technotes/shadow/shadow.ps]
*LPSM "Logarithmic" [http://gamma.cs.unc.edu/LOGSM/ http://gamma.cs.unc.edu/LOGSM/]
*MDSM "Multiple Depth" [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.59.3376&rep=rep1&type=pdf http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.59.3376&rep=rep1&type=pdf]
*RTW "Rectilinear" [http://www.cspaul.com/wiki/doku.php?id=publications:rosen.2012.i3d http://www.cspaul.com/wiki/doku.php?id=publications:rosen.2012.i3d]
*RMSM "Resolution Matched" [http://www.idav.ucdavis.edu/func/return_pdf?pub_id=919 http://www.idav.ucdavis.edu/func/return_pdf?pub_id=919]
*SDSM "Sample Distribution" [http://visual-computing.intel-research.net/art/publications/sdsm/ http://visual-computing.intel-research.net/art/publications/sdsm/]
*SPPSM "Separating Plane Perspective" [http://jgt.akpeters.com/papers/Mikkelsen07/sep_math.pdf http://jgt.akpeters.com/papers/Mikkelsen07/sep_math.pdf]
*SSSM "Shadow Silhouette" [http://graphics.stanford.edu/papers/silmap/silmap.pdf http://graphics.stanford.edu/papers/silmap/silmap.pdf]

==See also==

* [[Shadow volume]], another shadowing technique
* [[Ray casting]], a slower technique often used in [[Ray tracing (graphics)|ray tracing]]
* [[Photon mapping]], a much slower technique capable of very realistic lighting
* [[Radiosity (3D computer graphics)|Radiosity]], another very slow but very realistic technique

==Further reading==

* [http://www.whdeboer.com/papers/smooth_penumbra_trans.pdf Smooth Penumbra Transitions with Shadow Maps] Willem H. de Boer
* [http://www.cs.unc.edu/~zhangh/shadow.html Forward shadow mapping] does the shadow test in eye-space rather than light-space to keep texture access more sequential.
* [http://www.gamerendering.com/category/shadows/shadow-mapping/ Shadow mapping techniques] An overview of different shadow mapping techniques

==References==

<references />

==External links==

* [http://developer.nvidia.com/attach/8456 Hardware Shadow Mapping], [[NVIDIA|nVidia]]
* [http://developer.nvidia.com/attach/6769 Shadow Mapping with Today's OpenGL Hardware], nVidia
* [http://www.riemers.net/Tutorials/DirectX/Csharp3/index.php Riemer's step-by-step tutorial implementing Shadow Mapping with HLSL and DirectX]
* [http://developer.nvidia.com/object/doc_shadows.html NVIDIA Real-time Shadow Algorithms and Techniques]
* [http://www.embege.com/shadowmapping Shadow Mapping implementation using Java and OpenGL]

{{DEFAULTSORT:Shadow Mapping}}

[[Category:Shading]]

Revision as of 09:55, 24 June 2013
